Lessons from a Quadratic Voting Experiment: The Sunlight Music Awards

Jul 14, 2021 · 4756 words · 10-minute read

Quadratic Voting (QV) was popularized by Eric A. Posner and E. Glen Weyl in the 2010s as a mechanism to incorporate intense minority preferences.1 Voters are given “credits” or “tokens” to allocate among candidates. They can cast multiple votes for the same candidate, but the cost in credits is the square of the number of votes they wish to cast.2 This way, voters get to express not only the direction but also the strength of their preferences, paying a higher marginal cost for each additional vote.
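The cost rule can be sketched in a few lines of Python. This is only an illustration of the squaring rule described above; the function names are made up here and do not come from any QV library.

```python
def qv_cost(votes: int) -> int:
    """Credits needed to cast `votes` votes for a single candidate."""
    return votes ** 2


def marginal_cost(votes: int) -> int:
    """Extra credits needed to go from `votes - 1` to `votes` votes."""
    return qv_cost(votes) - qv_cost(votes - 1)


# Total and marginal cost of the first five votes for one candidate.
costs = [(v, qv_cost(v), marginal_cost(v)) for v in range(1, 6)]
for votes, total, extra in costs:
    print(f"{votes} vote(s): {total} credits in total, last vote cost {extra}")
```

The marginal cost grows by two credits with every additional vote (1, 3, 5, 7, 9), which is what makes piling votes onto a single candidate progressively more expensive.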

While there have been many QV applications (e.g. on a crypto forum, in the Colorado State House, in an online academic study), I wanted to design a QV experiment myself so that 1) voting would produce a substantively meaningful outcome in the real world, 2) the data would be made public for analysis, and 3) I would get to “look under the hood” and personally experience any challenges posed by QV, logistical or otherwise.

In 2020, with the help of close to 100 strangers on the Internet, I organized a music award using QV that highlighted the best songs, albums, and new artists of 2019 in the Chinese-speaking world. The award is substantively meaningful because 1) the Chinese art and entertainment scene suffers from rampant payola, and 2) many musicians are barred from competition for geopolitical reasons. In other words, there is no credible music award in the second largest entertainment market in the world, and I wanted to create one that’s fair, transparent, professional, and inclusive.

In the end, my experience running the experiment far exceeded expectations. The genre-, language-, and nationality-agnostic award,3 which I named Sunlight (阳光之下), attracted dedicated music critics who collectively spent more than 1,000 hours nominating and judging. Winners of the competition were featured in a social media post that garnered more than two million views; musicians applauded our project and shared it with their fans; editors at one of China’s largest digital media outlets helped design our poster (shown below); senior executives at some of China’s largest streaming platforms and record labels reached out… Most importantly, I experienced first-hand some of the properties and flaws of quadratic voting.

In this post, I will summarize the lessons I learned, primarily from the perspective of collective decision-making. If you’re curious about the music itself, please consult my posts in Chinese and the YouTube playlist of award winners. Data from the nomination and voting rounds are available in this GitHub repo, as are logs of all minor rule changes I had to make along the way.

Roadmap

This post is broken down into four sections:

- Experimental design: Mechanisms to screen out negligent judges; guards against collusion; mechanisms to promote and test the judges' understanding of the voting rule;

- Voting outcomes: Qualitative summary of different winner types (“vanilla” vs. “edgy”); simulation of winners under alternative voting systems; “expensive” songs and social identity;

- Flaws in the QV experiment and recommendations for future voting: The credit:candidate ratio; the nomination process; tractability;

- Meta-thoughts on creating and maintaining an online community: The recruitment funnel; the reach.

Experimental Design

Judge Recruitment and Demographics

In July and August 2020, I recruited 54 volunteer judges by searching music-related keywords on Chinese social media.4 I pitched to them the idea of participating in a transparent, innovative award that would honor artistic achievements over popularity. (I’ll discuss the recruitment funnel in a later section.) Below is the judges' demographic information I collected in the preliminary survey:

- Decades of birth: 1970s to 2000s

- Provinces of birth: 24 (out of 34 in China)

- Current location: 26 cities in 4 countries

- % Female:5 50

- % with Bachelor’s degrees or above: 83

- Median spending on music in 2019: USD 500 (streaming subscriptions, live events, etc.)

- Domain knowledge:

- % Proficient in an instrument: 44

- % Ever involved in music composition, production, or distribution: 30

- % Ever worked full-time in music-related industries: 28

- % Took college-level music classes: 24

The award consisted of two rounds: nomination and voting. In both rounds, judges were told to select works of art or individuals “worthy of being recommended to posterity.”

Sixteen of the more professional judges (e.g. radio show hosts, full-time music critics) were invited to each nominate ten songs, five albums, and three new artists of the year. The list of nominees was then shared with all 54 voting judges, whose task was to allocate 100 credits in each of the three award categories by the quadratic voting rule.

Given that one of Sunlight’s primary objectives was to highlight works of great artistic value, we thought it appropriate to include multiple winners on our promotion poster. Below are the number of nominees and the number of winners we set out to feature in each category.

| Award Category | # Nominees | # Winners |
|---|---|---|
| Songs of the Year | 121 | 20 |
| Albums of the Year | 50 | 5 |
| New Artists of the Year | 31 | 3 |

The aforementioned rules were clearly communicated to judges before voting began, and I tied my hands by keeping a real-time log of all rule changes in Sunlight’s GitHub repo.

Attentiveness of Judges

I define a negligent judge as someone who votes without having listened to all of the nominees. If there’s a sufficiently large number of negligent judges in our pool, winning would be highly correlated with the artists' name recognition, and Sunlight wouldn’t be creating information the search engine indices didn’t already know.

Thus, to ensure the attentiveness of our judges, I sent out the online ballot a month after revealing the list of nominees, and all judges had to complete three 15-minute-long online surveys spaced out over the course of three months. A friend dubbed my approach the “reverse Nigerian prince” – making the process sufficiently complex and protracted so that only the most serious remain.5

Collusion

I worried about collusion for two reasons:

- The number of judges was small (<100), so the chance that a bloc of three or five judges could supply the pivotal votes was extremely high;

- The incentive to collude is stronger under QV than under other voting systems (approval, one-person-one-vote, ranked choice voting) – if A wanted to cast 10 votes for Candidate 1, he could either spend all of his 100 credits or collude with nine other judges, in which case they’d collectively spend only 10 credits on Candidate 1.
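The arithmetic behind the second point can be checked with a short sketch. The variable names are illustrative only, and the bloc is assumed to split the votes evenly.

```python
def qv_cost(votes: int) -> int:
    """Credits needed to cast `votes` votes for one candidate."""
    return votes ** 2


target_votes = 10  # votes the judge wants Candidate 1 to receive

# One judge acting alone buys all ten votes.
solo_cost = qv_cost(target_votes)

# Ten colluding judges each buy a single vote instead.
bloc_size = 10
bloc_cost = bloc_size * qv_cost(target_votes // bloc_size)

print(f"alone: {solo_cost} credits, as a bloc of {bloc_size}: {bloc_cost} credits")
```

Splitting the same ten votes across ten ballots cuts the total price from 100 credits to 10, which is why the convexity of the cost function rewards coordination.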

To guard against collusion, I communicated with each of the judges privately. The optimal setup for this mode of communication would be email – I could bcc everyone the survey links and respond to individual inquiries. However, since China jumped directly from pen-and-paper to mobile, people do not use email. I ended up creating a spreadsheet of the judges' online aliases and talked to each of them on their preferred social media platform. It was an unfortunate compromise I had to make for the experiment, and I would definitely have to find a more efficient setup if I were to scale up the voting in future years.

Understanding of QV

To ensure that judges understood the new and complex voting rule, I included a toy example in the preliminary survey and quizzed the judges on it. Three out of the 54 judges came back with more questions – I’ll discuss the tractability of QV in a later section.

Voting Outcomes

Vanilla vs. Edgy Work

To me, the most interesting finding of the experiment is the contrast between what I call “vanilla” and “edgy” work.

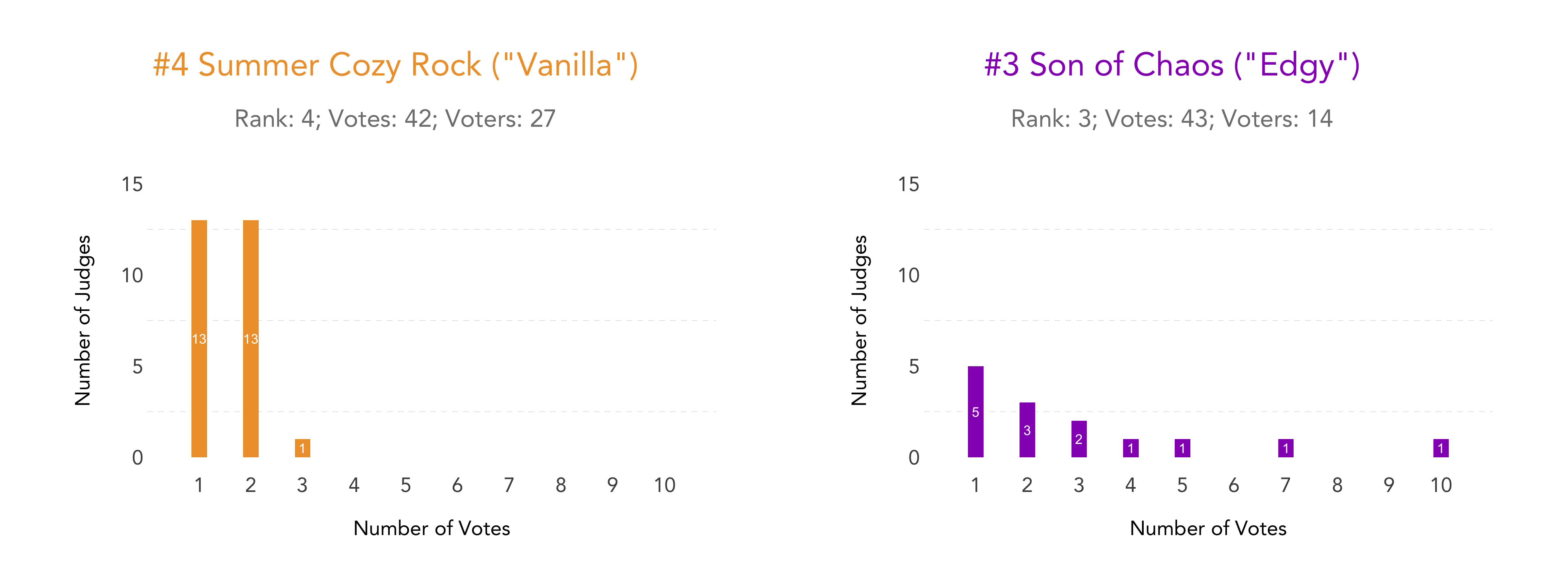

Below is the vote distribution of two similarly ranked songs. Summer Cozy Rock and Son of Chaos received 42 and 43 total votes respectively and are thus ranked one spot apart (#4 and #3). However, the former has nearly twice as many supporters as the latter: 27 judges cast nonzero votes for Summer Cozy Rock, but almost everyone gave one or two votes. In contrast, only 14 judges cast nonzero votes for Son of Chaos, but many spent large numbers of credits.

For lack of better words, I call the type of work with breadth but little depth of support “vanilla” (no negative connotation intended) and that with deep but narrow support “edgy.” It’s a testament to the strength of QV that both types of songs could come out victorious under this voting system, and I’ll simulate the winners under alternative voting rules in a later section.
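One way to put numbers on "breadth" and "depth" is to treat the number of supporters as breadth and the average number of votes per supporter as depth. The sketch below uses only the totals quoted above; the `depth` helper is a name introduced here for illustration.

```python
# Figures quoted in this post: (total votes, judges casting nonzero votes).
songs = {
    "Summer Cozy Rock": (42, 27),
    "Son of Chaos": (43, 14),
}


def depth(total_votes: int, supporters: int) -> float:
    """Average number of votes per supporting judge."""
    return total_votes / supporters


for name, (total, supporters) in songs.items():
    print(f"{name}: breadth = {supporters} judges, "
          f"depth = {depth(total, supporters):.2f} votes per supporter")
```

On these figures the Edgy song's supporters cast roughly twice as many votes each (about 3.07 versus 1.56), even though the two totals are almost identical.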

The nice thing about testing QV using a music award is that we can hear the difference between the different types of winners. Here’s the Vanilla song Summer Cozy Rock:

The melody is catchy, the rhythm easily places you on a beach, and the singing voice, when it’s present at all, never overpowers. Lyrically, the song follows the Japanese trend of singing foreign words with native-language grammar:

Upset / Cold, wet / I just gonna feel alright (sic)

This time / Love bites / Wild roses in my hand

It really is the least offensive song you could put on for a large event or for a relaxing day outdoors.

The Edgy song Son of Chaos, however, can’t be more different:

Instrumentally, the jazz beat and heavy Bob Dylan influences already sound foreign to a Chinese ear. What’s more, the singer-songwriter adopts an unusual singing voice, elongating vowels and almost howling in places. The peculiar singing style no doubt amplifies the political message of the song:

He pushes the window open and wonders whether he should jump off

Outside, the incessant stream of horses and carriages (i.e. heavy traffic) tells you to follow the orders of the era

The winners draw up the rules, so as to make the winners keep on winning and the losers keep on losing

Some starve and suffer, others numbed in their comfort

In the next two sections, I’ll discuss the implications of this “vanilla” vs. “edgy” distinction, first from the perspective of the artist/candidate and then from the perspective of the event organizer/social planner.

Better to be Loved by Few or Liked by Many?

From a product perspective, we can either take our type (“vanilla” vs. “edgy”) as a given and maximize profits or choose our type based on which maximizes profits.

Setting Prices

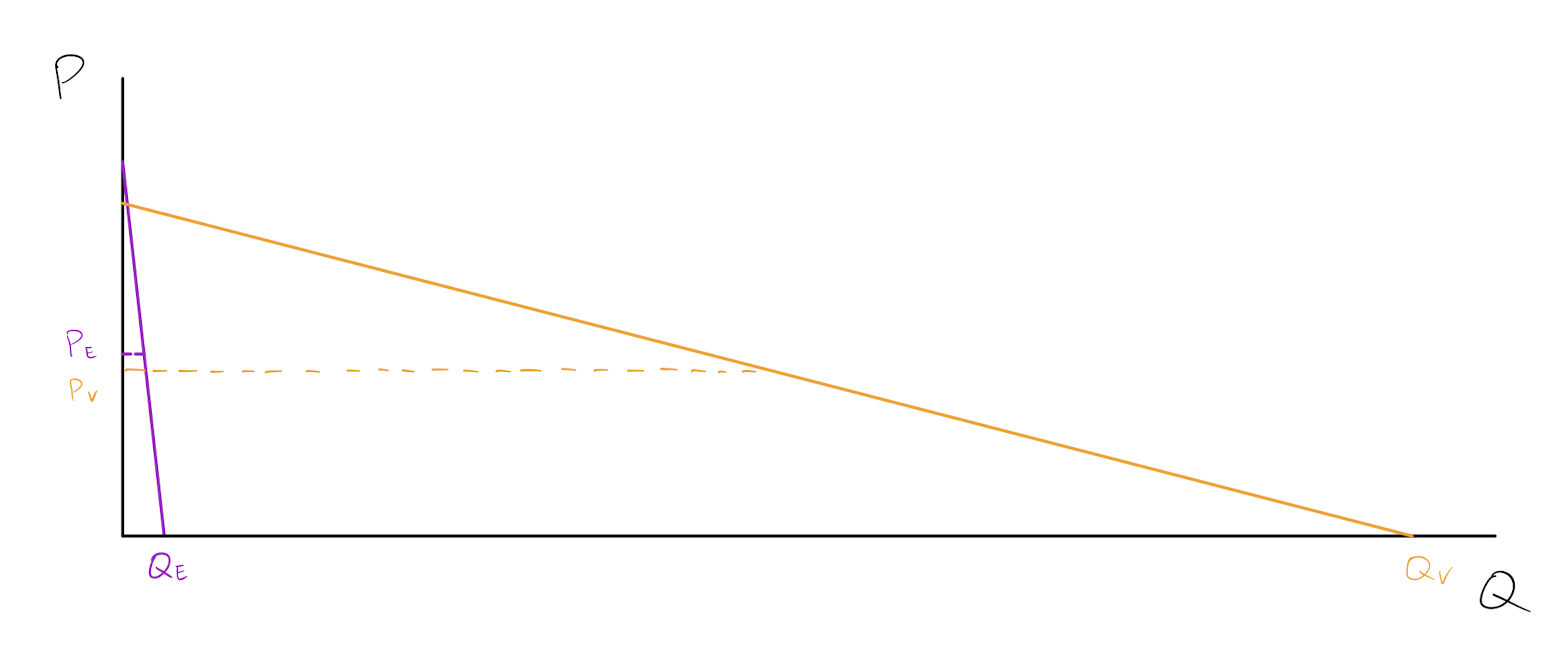

If we take our type as a given, the QV experiment shows that we might be able to increase profits by raising prices on niche products a few people love. The demand curves for the two types may look like the following:

Even though Vanilla sells much greater quantities than Edgy at lower prices, Edgy’s profit-maximizing price is higher than Vanilla’s because demand for Edgy is inelastic.

Anecdotally, the artist behind the “edgy” song Son of Chaos frequently mentions on social media that he’s not able to sell concert tickets priced at a mere $20 apiece. One possibility is that he lacks name recognition and hence should spend on marketing. But given that his work had such a deep but narrow basis of support in our voting experiment – both of the two most “expensive” songs and the most “expensive” album in terms of the average number of votes per supporter were written by him – I wonder whether he is that edgy type and hence should raise rather than lower prices to maximize profit.

More generally, if we don’t want to compromise on the product, it’s good to guesstimate the price elasticity of demand so that we can settle on the best business model (e.g. freemium vs. paid; if paid, how much).

Setting Types

If our type is flexible, we can decide whether we want to put out a vanilla or an edgy product. We could also market the same product in different ways, depending on whether it makes more sense to draw a broad but shallow base of support or a deep but narrow one.

In online dating, for example, those with more polarizing photos receive more messages, conditional on the level of attractiveness. In today’s tech investing, being contrarian, or at least packaging the investment thesis as such, also seems to be a winning strategy.

Winners under Alternative Voting Rules

From the social planner/organizer’s perspective, we can also see how voting rules affect the type of winner that emerges. Since QV records not only the direction but also the intensity of preferences, we have a rich set of data that can be transformed to simulate award winners under alternative voting rules.

Suppose a judge under QV casts seven votes for Song A, seven votes for Song B, one vote for Song C, and one vote for Song D, exhausting all 100 credits (49*2 + 1*2 = 100). Here’s how I’d transform her vote under the following alternative rules: one-person-one-vote, one-person-three-votes, Condorcet, Borda, and instant-runoff voting/IRV (also known as Alternative Vote/AV or Ranked Choice Voting/RCV).

| Voting Rule | Transformation |
|---|---|
| One-Person-One-Vote | A or B, each with 50% probability |
| One-Person-Three-Votes | {A, B, C} or {A, B, D}, each with 50% probability |
| Condorcet | {A > B > C > D}, {A > B > D > C}, {B > A > C > D}, {B > A > D > C}, each with 25% probability |
| Borda | same as above |
| Instant Runoff | same as above |
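The transformation in the table can be sketched as a ranking with random tie-breaking. This is a minimal illustration, not the code used for the simulation: the function names are hypothetical, and leaving zero-vote candidates off the ranking is an assumption made here.

```python
import random
from collections import defaultdict
from typing import Dict, List


def random_ranking(ballot: Dict[str, int], rng: random.Random) -> List[str]:
    """Rank candidates by QV votes (descending), breaking ties at random.

    Assumption: candidates with zero votes are left off the ranking.
    """
    by_votes = defaultdict(list)
    for candidate, votes in ballot.items():
        if votes > 0:
            by_votes[votes].append(candidate)
    ranking = []
    for votes in sorted(by_votes, reverse=True):
        tied = list(by_votes[votes])
        rng.shuffle(tied)  # each ordering of a tied group is equally likely
        ranking.extend(tied)
    return ranking


def top_k(ballot: Dict[str, int], k: int, rng: random.Random) -> List[str]:
    """One-person-k-votes: the first k candidates of a random ranking."""
    return random_ranking(ballot, rng)[:k]


ballot = {"A": 7, "B": 7, "C": 1, "D": 1}
rng = random.Random(0)  # fixed seed so the run is reproducible
ranking = random_ranking(ballot, rng)
print("ranking for Condorcet/Borda/IRV:", ranking)
print("one-person-one-vote:", top_k(ballot, 1, rng))
print("one-person-three-votes:", top_k(ballot, 3, rng))
```

Condorcet, Borda, and IRV all consume the full ranking, while the one-vote and three-vote rules only keep its first one or three entries.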

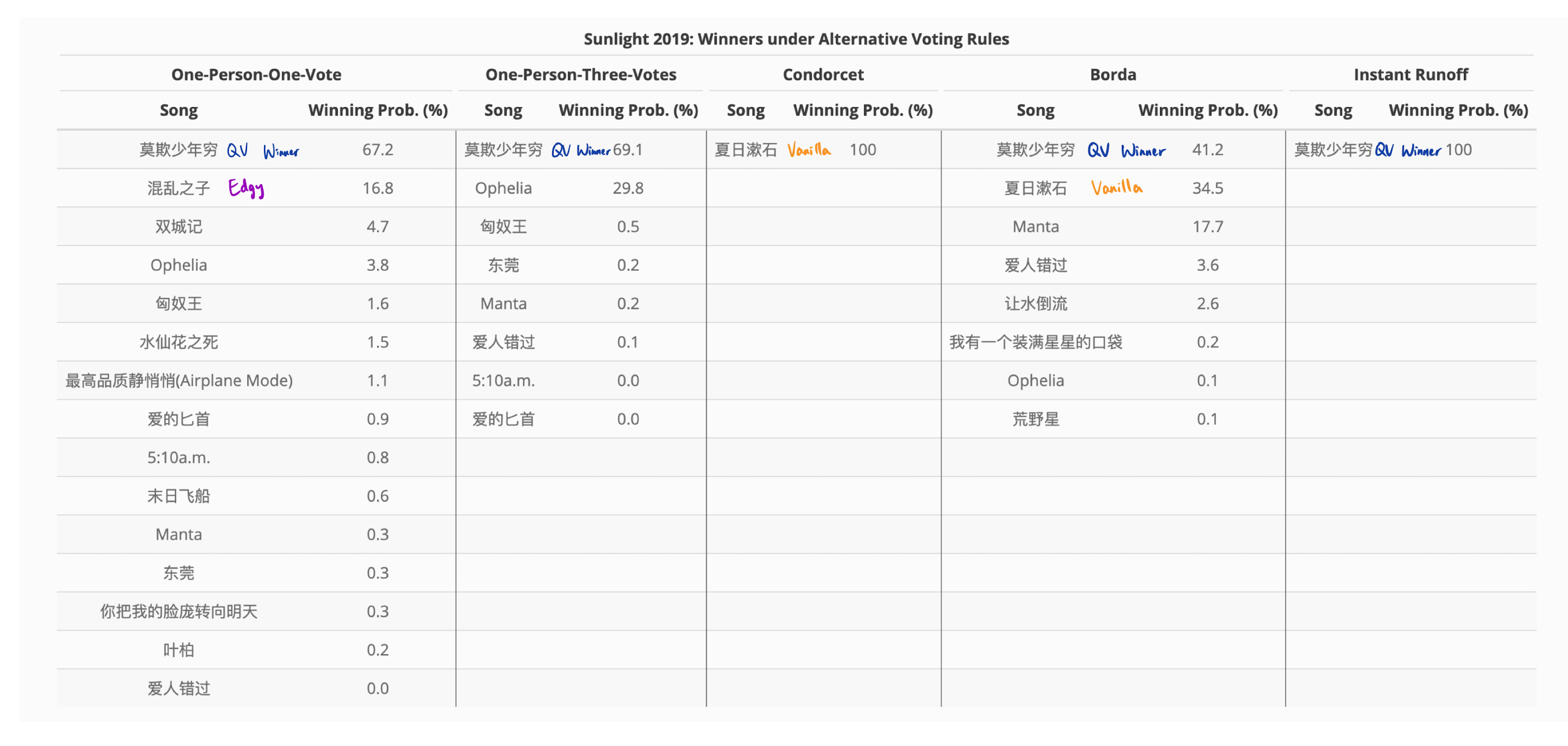

Below are the winning probabilities for each song (i.e. the probability that a song would finish first among the 121 nominees) under the alternative voting regimes. Again, the stochastic element comes from the fact that there may be ties in a judge’s QV votes.

Of course, judges would vote differently were we to change the voting rule – in our post-results survey, 23% of judges said they voted strategically – but if we take the QV votes as the judges' true preferences and assume that they vote sincerely under alternative designs, we see that only IRV, the voting rule now used in the Oscars as well as the New York City mayoral primary, produces the original QV winner for sure. Summer Cozy Rock, the Vanilla song liked (but not loved) by many, wins under Condorcet because it’s ranked higher in most pairwise comparisons. Similarly, the Borda count method also gives the Vanilla song a one-in-three chance of winning.

Son of Chaos, the Edgy song, has a chance to win only under the one-person-one-vote regime. It benefits from the fact that it’s ranked first by a small number of judges and that there are 121 candidates to split the votes.
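To show how winning probabilities of this kind can be estimated, here is a hedged Monte Carlo sketch covering plurality and Borda only. The ballots are invented toy data, not the Sunlight votes, and the helper names, the Borda scoring of unranked candidates (zero points), and the random tie-breaking between co-winners are all assumptions.

```python
import random
from collections import Counter


def random_ranking(ballot, rng):
    """Order candidates by QV votes; shuffle first so ties break at random."""
    names = [c for c, v in ballot.items() if v > 0]
    rng.shuffle(names)
    return sorted(names, key=lambda c: -ballot[c])  # sorted() is stable


def plurality_winner(rankings, rng):
    tally = Counter(r[0] for r in rankings if r)
    best = max(tally.values())
    return rng.choice(sorted(c for c, n in tally.items() if n == best))


def borda_winner(rankings, candidates, rng):
    n = len(candidates)
    score = Counter()
    for r in rankings:
        for position, c in enumerate(r):
            score[c] += n - 1 - position  # unranked candidates get 0 points
    best = max(score.values())
    return rng.choice(sorted(c for c in score if score[c] == best))


def win_probabilities(ballots, rule, trials=2000, seed=0):
    rng = random.Random(seed)
    candidates = sorted({c for b in ballots for c in b})
    wins = Counter()
    for _ in range(trials):
        rankings = [random_ranking(b, rng) for b in ballots]
        if rule == "plurality":
            wins[plurality_winner(rankings, rng)] += 1
        else:
            wins[borda_winner(rankings, candidates, rng)] += 1
    return {c: wins[c] / trials for c in candidates}


# Toy ballots (hypothetical): a few judges love "Edgy", everyone likes "Vanilla".
ballots = [
    {"Edgy": 9, "Vanilla": 2},
    {"Edgy": 9, "Vanilla": 2},
    {"X": 9, "Vanilla": 2},
    {"Y": 9, "Vanilla": 2},
    {"Z": 9, "Vanilla": 2},
    {"Edgy": 7, "Vanilla": 7},  # a tie, resolved at random in each trial
]
plurality = win_probabilities(ballots, "plurality")
borda = win_probabilities(ballots, "borda")
print("plurality:", plurality)
print("borda:", borda)
```

In this toy electorate the Edgy candidate always wins under plurality and the Vanilla candidate always wins under Borda, which mirrors the qualitative pattern described above without reproducing the actual results.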

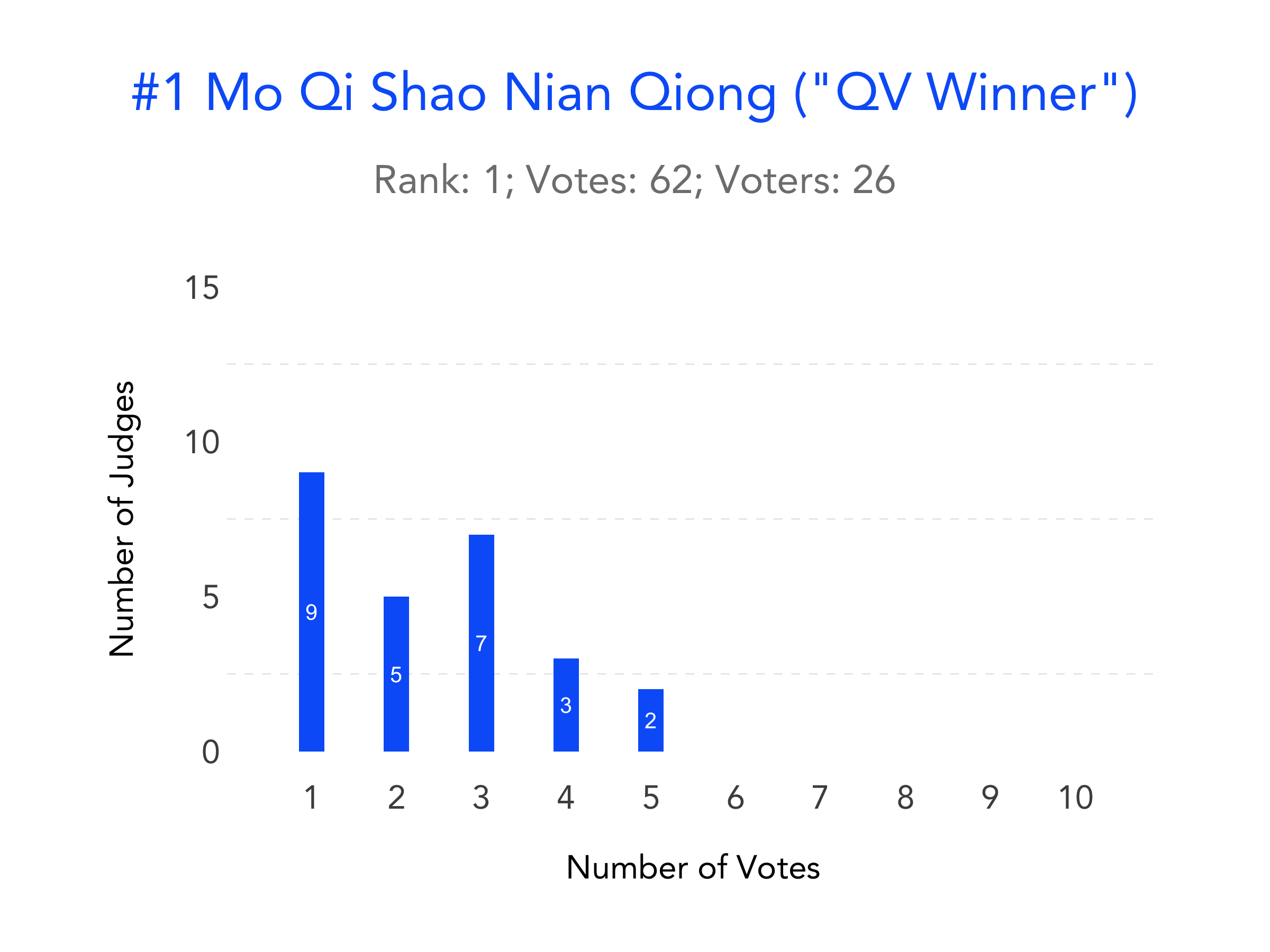

It’s worth looking at the original QV winner’s vote distribution more carefully – Mo Qi Shao Nian Qiong, a Hakka rock song, ranks first because it enjoys both breadth and depth of support. It’s exactly the kind of “consensus choice” QV and IRV are supposed to reward, and it also wins most of the time under one-person-one-vote, one-person-three-votes, and the Borda count. We are lucky to have such a song in the competition, or else we’d conclude from our simulation exercise that the winner was highly sensitive to the voting rule we chose.

How did the QV winner find the sweet spot between breadth and depth of support, you may ask? The answer: the band took a great song to a wildly popular reality show!

Listen to the song yourself, and you’ll see that the lead singer’s singing style is highly unusual and that the language (Hakka) is not even understood by 97% of Chinese people. The band’s commercial success and its success at our music award show how important (and how difficult) it is to launch works of great artistic value into the mainstream.

Ideally, we should honor this type of candidate – one that enjoys both breadth and depth of support – in our art awards and in our political elections. But it’s often the case that candidates either fall into the Edgy or the Vanilla camp, if there are desirable candidates at all. Thus, the voting rule we choose could have a huge impact on the type of winner that emerges in the end. We must have a serious discussion about the type of winner we want to have before gathering together to vote on anything.

Perhaps for political elections we ought to go for the vanilla and for art awards the edgy. (In my view, the Oscars made a terrible decision when it switched to IRV in 2009 because consensus in art means “okay but boring.”)

Expensive Songs and Social Identity

Another interesting pattern in the data is the association between social identity and “expensive” songs, i.e. songs with the highest average number of votes per supporter. I list the topics of the ten most “expensive” songs below:

- Inequality, personal liberty (Son of Chaos)

- Romantic love (written by the same singer-songwriter as #1)

- Hong Kong-mainland relations

- Hong Kong-mainland relations; Taiwan-mainland relations

- Life of factory workers in southern China

- Migration and homesickness

- Female identity; homosexuality; romantic love

- Homosexuality

- Life in the 1990s in China’s rust belt

- Conformity and deference to authority

It’s striking that more than half of the expensive songs deal with social issues and that only one is explicitly about romantic love. (Among the nominees, roughly 30% of the songs are about romantic love, and I expect this percentage to be a lot higher in the population of all songs that went on the market in 2019.)

With this list of “expensive” songs in hand, I can see how identity issues would attract the most fervent supporters if we were to implement QV in our political life. People care about their national, gender, and racial identities, and they care a lot.

Flaws in the QV Experiment

Besides interesting patterns, I also encountered issues in the course of running the experiment. I share three that may be relevant to future event organizers and social planners.

The Credit:Candidate Ratio

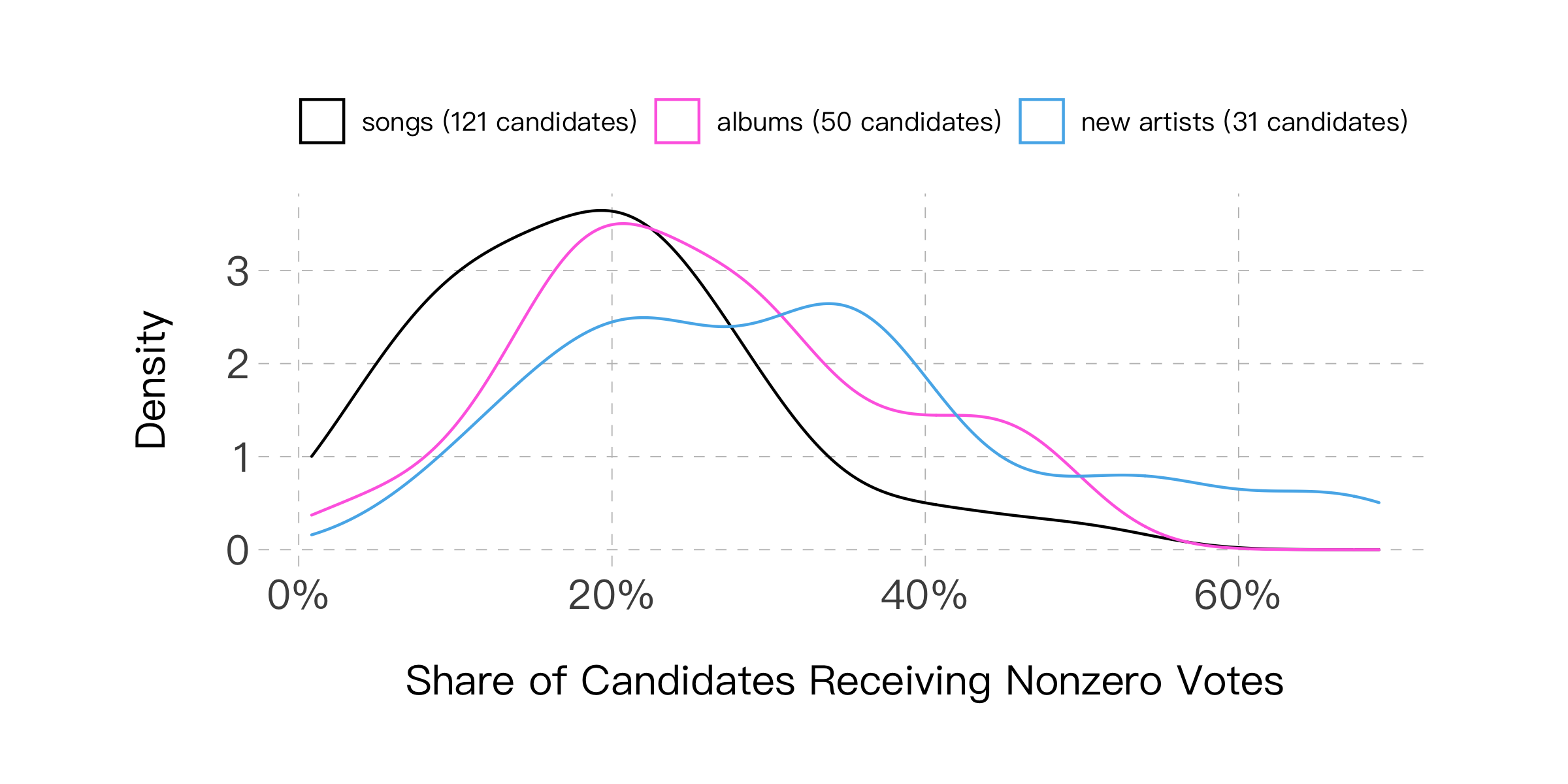

For simplicity’s sake, I gave judges 100 credits in each of the award categories. However, the voting outcome turned out to be highly sensitive to the credit:candidate ratio – award categories with a lower credit:candidate ratio (e.g. songs of the year) elicited people’s strong preferences, whereas award categories with a higher credit:candidate ratio (e.g. new artists of the year) elicited people’s weaker preferences as well.

Below is the distribution of the share of candidates receiving nonzero votes by judge. We see that the median judge gave 20% of nominated songs nonzero votes, but that percentage for albums of the year was 30% and, for new artists of the year, almost 40%.
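The per-judge statistic in that figure is straightforward to compute from raw ballots. The sketch below uses three invented ballots for a hypothetical 10-nominee category, so the numbers it prints are not the Sunlight results.

```python
from statistics import median


def nonzero_share(ballot, n_candidates):
    """Fraction of the nominees to which a judge gave at least one vote."""
    supported = sum(1 for votes in ballot.values() if votes > 0)
    return supported / n_candidates


# Invented ballots for a category with 10 nominees (votes, not credits).
n_candidates = 10
ballots = [
    {"a": 5, "b": 5},
    {"a": 3, "c": 3, "d": 3, "e": 1},
    {"b": 9, "f": 0},
]

shares = [nonzero_share(b, n_candidates) for b in ballots]
median_share = median(shares)
print("share of nominees supported, by judge:", shares)
print("median judge:", median_share)
```

Running the same calculation per award category gives the three distributions being compared.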

There are multiple explanations as to why a larger credit:candidate ratio led to a larger share of candidates receiving nonzero votes, but the implication was that the correlation between name recognition and winning was a lot stronger in the “new artists” category than in the other two.

The most scientific way of showing the different degrees of correlation would be to retrieve the number of streams or the number of comments for each nominee and plot that on the x-axis (a proxy for name recognition) and the QV ranking on the y-axis. We would expect that the two are most correlated for the “new artists” nominees. Unfortunately, getting comment counts would take too much time – the Chinese streaming platforms don’t have an API – so you have to take my word for it.
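For readers who do have access to such data, the comparison could be run with a rank correlation. The sketch below implements Spearman's coefficient for inputs without ties and runs it on made-up numbers; `streams` and `qv_votes` are hypothetical placeholders, not data from the award.

```python
def ranks(values):
    """Rank values from 1 (smallest) to n; assumes there are no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman(x, y):
    """Spearman rank correlation for two equal-length, tie-free sequences."""
    n = len(x)
    d_squared = sum((a - b) ** 2 for a, b in zip(ranks(x), ranks(y)))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Hypothetical numbers for four nominees in one category.
streams = [900, 50, 400, 120]   # proxy for name recognition
qv_votes = [30, 5, 22, 12]      # total QV votes received

rho = spearman(streams, qv_votes)
print(f"Spearman correlation: {rho:.2f}")
```

Computing the coefficient separately for each award category would make the claimed difference between "new artists" and the other two categories testable. Real data would likely contain ties, in which case a tie-aware implementation is needed.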

If I were to rerun the experiment, I’d keep the number of credits proportional to the number of nominees – 100 for songs of the year, 50 for albums, 30 for new artists. The greater concern, however, is that QV outcomes seem highly sensitive to the credit:candidate ratio and that when the credit:candidate ratio is high, candidates can greatly increase their odds of winning by improving name recognition.
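The ratios implied by the two budgets can be worked out from the nominee counts given earlier. This is plain arithmetic on figures from this post; the dictionary and function names are illustrative.

```python
nominees = {
    "Songs of the Year": 121,
    "Albums of the Year": 50,
    "New Artists of the Year": 31,
}

# What was actually used: a flat 100 credits per category.
flat_credits = {category: 100 for category in nominees}

# The proportional budget suggested above.
scaled_credits = {
    "Songs of the Year": 100,
    "Albums of the Year": 50,
    "New Artists of the Year": 30,
}


def ratios(credits):
    """Credit:candidate ratio for each award category."""
    return {c: credits[c] / nominees[c] for c in nominees}


flat = ratios(flat_credits)
scaled = ratios(scaled_credits)
for category in nominees:
    print(f"{category}: flat {flat[category]:.2f}, scaled {scaled[category]:.2f}")
```

Under the flat budget the ratio ranges from about 0.83 (songs) to about 3.23 (new artists); the proportional budget brings all three categories to roughly one credit per nominee.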

For example, if a voter is given 100 credits to allocate between 10 candidates, he might cast five votes each for his two favorite candidates and one vote each for the three candidates he has heard of. If the candidates people have strong preferences for are sufficiently different and if one candidate receives enough “why not” votes due to high name recognition, that candidate may just win. Furthermore, the social planner could change how likely this candidate wins simply by increasing or decreasing the number of credits given to voters.
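The mechanism can be illustrated with a stylized electorate. Everything in this sketch is an assumption made for illustration: 20 voters, 30 niche candidates plus one well-known candidate, each voter casting five votes for each of two favorites and, if credits remain, a single "why not" vote for the well-known candidate. Other "why not" votes are omitted for simplicity.

```python
from collections import Counter


def tally(n_voters, n_niche, budget):
    """Total votes per candidate in a stylized electorate (all assumptions)."""
    if budget < 50:
        raise ValueError("budget must cover two favorites at 25 credits each")
    totals = Counter()
    for i in range(n_voters):
        spent = 0
        # Two favorites, five votes each (5 ** 2 = 25 credits apiece).
        for k in (2 * i, 2 * i + 1):
            totals[f"niche{k % n_niche}"] += 5
            spent += 5 ** 2
        # A one-credit "why not" vote for the famous candidate, if affordable.
        if budget - spent >= 1:
            totals["famous"] += 1
    return totals


def winner(totals):
    return max(totals, key=totals.get)


generous = tally(n_voters=20, n_niche=30, budget=100)
tight = tally(n_voters=20, n_niche=30, budget=50)
print("budget 100:", winner(generous), "with", generous[winner(generous)], "votes")
print("budget 50:", winner(tight), "with", tight[winner(tight)], "votes")
```

With 100 credits, the well-known candidate collects 20 single votes and beats every niche candidate, none of which gets more than 10. With 50 credits the spare votes disappear and a niche favorite wins, so the outcome flips on the budget alone.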

I haven’t seen this issue discussed in the QV literature, and I’d love to explore voters' decision-making process under different credit:candidate ratios.

The Nomination Process

Another issue that occurred to me only after I ran the experiment was how crucial the nomination process was to the final outcome.

In search and recommendation system design, there are two separate processes: retrieval (finding the relevant information) and ranking (putting the information in order). The QV experiment was mostly about the latter, but finding the right 100 or so candidates in the pool of hundreds of thousands of songs is much more difficult and perhaps just as important to the final outcome as the voting process.

In the context of Sunlight, I’m quite certain that we would’ve gotten a different list of “top 20 songs” had I chosen a different set of nominating judges, widened or narrowed the pool of judges, increased or decreased the number of nominees each judge could put forward… (It’s telling that of the 121 songs nominated, only 21 were mentioned by more than one judge.) In stark contrast to the “rigorous and scientific” voting round, every step in the nomination round was designed ad hoc.

The equivalent issue in politics would be deciding on what to put to a vote or who qualifies to be on the ballot. The usual solution today is signature gathering: aspiring office holders gather enough signatures to file for candidacy; proponents of referenda gather signatures to put an issue on the ballot. The cynical view is that with enough money, one could gather signatures and call for a referendum on anything. If people are voting with real money using QV, we’d be concerned that the rich would keep putting the same issue on the ballot, and the poor would eventually lose, not because they don’t have strong objections to the issue but because they’ve run out of money.

Deciding who and what goes on the ballot may be just as important as, if not more important than, the precise ordering of candidates. We need to get serious (and scientific) about the nomination process as well.

Tractability

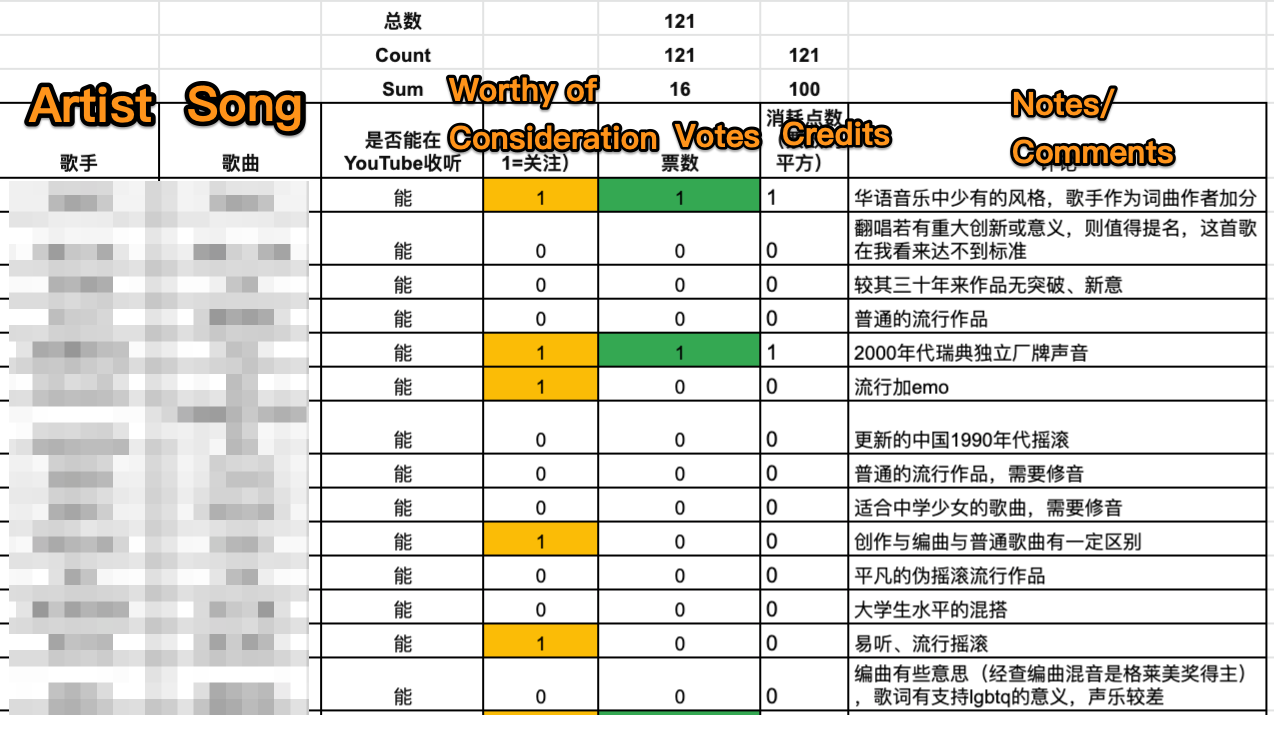

As I mentioned earlier, three of the 54 judges expressed confusion about QV after they had successfully completed a quiz involving a toy example. In our post-results survey, many more said that the voting process was cognitively demanding because they had to hold their impressions of hundreds of songs in their heads while constantly squaring and summing.
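The "squaring and summing" that judges had to do by hand amounts to the check below. It is a minimal sketch, not the worksheet judges received; the function names are hypothetical and votes are assumed to be non-negative integers.

```python
def credits_spent(ballot):
    """Sum of squared votes across all candidates on a ballot."""
    return sum(votes ** 2 for votes in ballot.values())


def check_ballot(ballot, budget=100):
    """Report credits spent, credits remaining, and whether the ballot fits."""
    spent = credits_spent(ballot)
    return {"spent": spent, "remaining": budget - spent, "valid": spent <= budget}


# The example ballot from earlier in this post: 49 + 49 + 1 + 1 = 100.
print(check_ballot({"A": 7, "B": 7, "C": 1, "D": 1}))

# Raising A to eight votes overshoots the budget: 64 + 49 = 113.
print(check_ballot({"A": 8, "B": 7}))
```

A ballot tool that ran this check after every change would take the arithmetic off the judges' hands, although it would not help with remembering the songs themselves.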

Below is a spreadsheet one of our judges created to help her keep track of the credit allocation. You can see that she first went through a round of approval voting (the orange column) and then started allocating credits based on the comments she had written next to each song.

Even though I provided toy examples, music playlists, and Excel worksheets, our judges still found the voting process demanding. Our pool of judges comes from an extremely privileged socioeconomic background – 83% have college degrees, and the median annual spending on music is around $500. If this group finds a rather low-stakes award difficult using QV, how would we expect the general public to evaluate dozens of real-world issues and candidates by squaring and summing? If voting becomes intractable, might we worry that name recognition again dominates people’s decision-making process?

Meta-Thoughts on Creating and Maintaining an Online Community

The Recruitment Funnel

For future reference, here’s the recruitment funnel for Sunlight 2019:

- Generic invitation sent to ~200 people

- ~80 agreed to participate

- 54 completed a deliberately protracted and complex voting process that lasted around two months

I shared a sign-up survey when we promoted our results in October 2020. More than 500 people completed the 15-minute survey and said they wanted to become judges.

The Reach

I was pleasantly surprised by the feedback I received – hundreds of people left encouraging comments and asked about future plans for the award; executives at China’s largest streaming platforms and independent record labels reached out… I had been posting on my Chinese social media for some time but was never part of any music communities. My takeaway from running Sunlight is that if you create something valuable to a community, that community will come and find you. In my case, I think many people – both ordinary music lovers and industry insiders – longed for transparency and curation, and they found both in Sunlight.

Dimensions of Musical Taste

(I’m keeping this bonus section last because it may appeal only to those who read Chinese.)

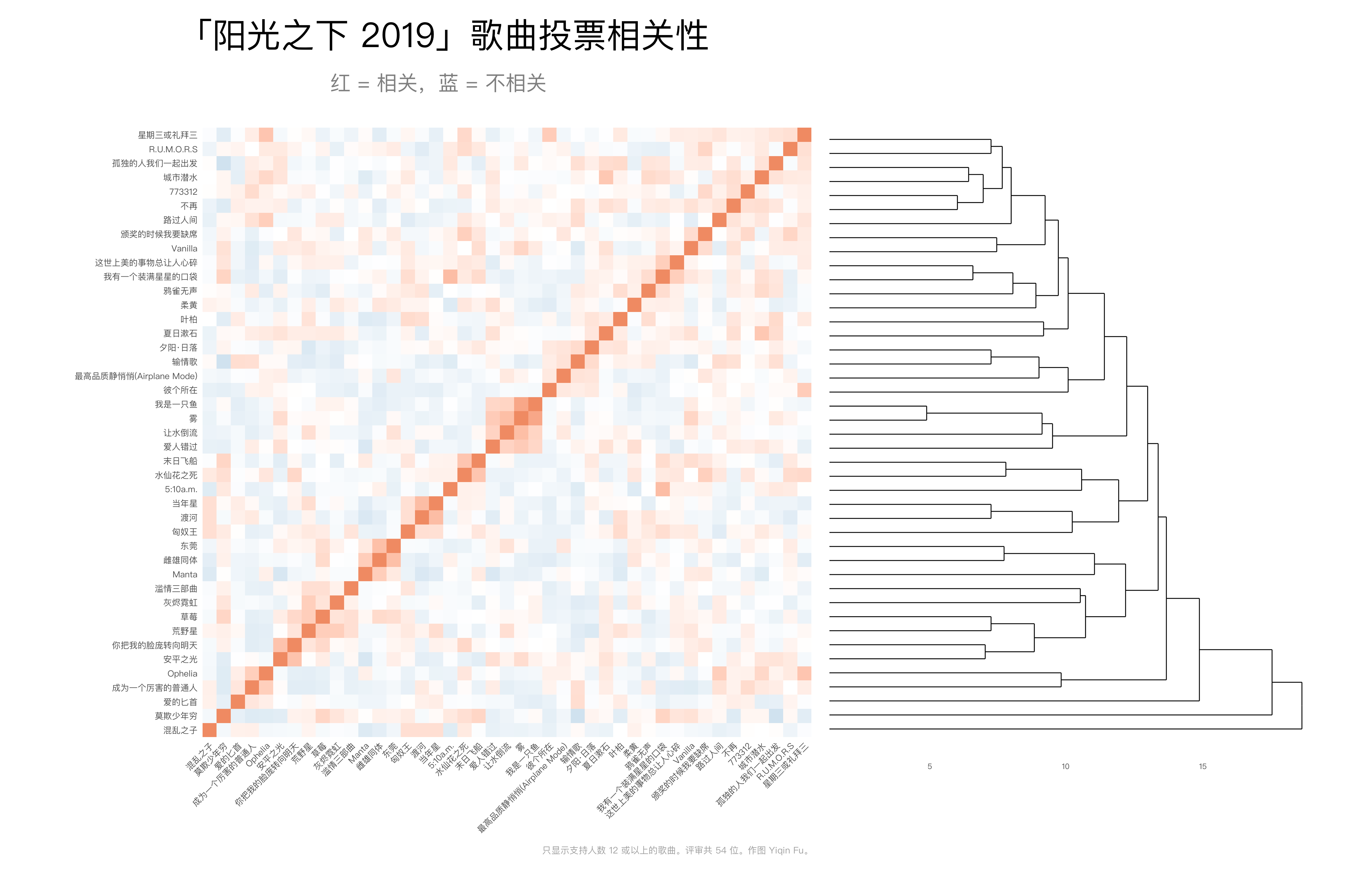

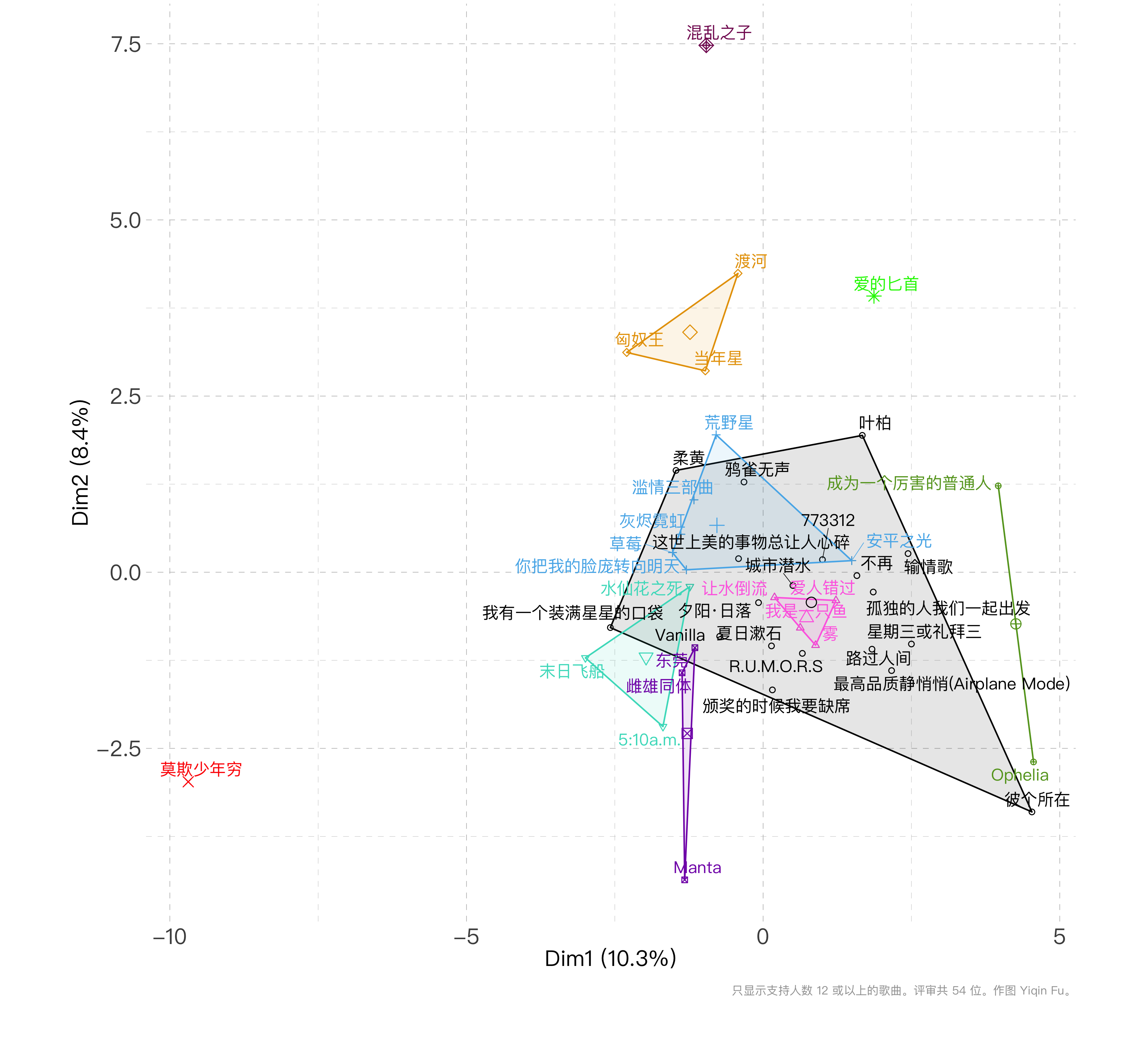

The award also revealed the many dimensions of people’s musical taste. I couldn’t perform any fancy analysis (e.g. PCA) because we had 121 rows (the number of nominated songs) but only 54 columns (the number of judges). However, correlation and clustering still gave us some hints.

Below are a few dimensions that I could make out based on the figures and some domain knowledge:

- Similarity in instrumental style (e.g. this song and this song sound almost identical!)

- Similarity in lyrical style (e.g. this song that talks about Buddhism and this song that talks about coming home both have sparse but poetic lyrics)

- Appearances on the same reality shows (this song and this song don’t have much in common, but the artists went on the same popular reality show)

- Associations with the same movies and dramas (this song and this song have nothing in common, but they appeal to the same demographic as viewers of this drama)

As discussed in a previous blog post on fame in the 20th century, the visual medium dominates cultural production today because it makes a stronger impact on the audience than text or audio. Young people may no longer be discovering musicians via radio or streaming but more so through reality shows, movies, and TikToks (all visual media).

Taste is also generational. Our younger judges liked the more upbeat songs like this and this, while our older judges appreciated songs that talked about factory work in Guangdong and life in China’s rust belt.

I made many like-minded Internet friends and learned a lot from running the experiment – about music, about QV, about project management. Please feel free to get in touch if you’re interested in any aspect of what I’ve written!

-

Philosophers, social scientists, and mathematicians have long known that voting rules have consequences – they affect the type of winner that emerges, which in turn affects participant incentives.

The Oscars, for example, switched from simple plurality voting (one person one vote; the film with the most votes wins) to instant runoff voting (IRV) in 2009. IRV is a ranked-choice voting system that allows people to express a richer set of preferences and re-distributes their weaker preferences until only one film remains. The intention of this rule change was to favor films liked by many over those loved by a few. Building consensus is good, right?

But the downside is that the Oscars may be awarding mediocre rather than edgy work – three of the ten Best Picture winners in the past decade were about Hollywood itself. (And more importantly, my favorite film Boyhood lost in most categories to what I’d consider good but less exciting work.)

My dream is for the Oscars and all other awards to share their data publicly. Then they can do away with expensive auditors and dismiss fraud allegations. Open data would even allow us to simulate our own winners under different voting rules. For example, I’d love to know whether Boyhood had the largest number of first-choice votes in 2015 but lost to Birdman because it wasn’t enough people’s second favorite. ↩︎

-

My understanding of why we use a quadratic function is that the first derivative of the utility function would be linear and hence easier to work with. ↩︎

-

The only eligibility criterion was that the song or the album had to be a studio recording targeted primarily at a Chinese-speaking audience. Among the nominated works were jazz and electronic compositions by Chinese American artists and choir music from a Shanghai charity group. A fifth of the nominated songs were sung in languages other than Mandarin: Hakka, Hokkien, Cantonese, the Ningbo dialect, Japanese, and English. 45% of the artists were born outside of mainland China, and roughly 70% sang about topics other than romantic love. ↩︎

-

I specifically looked for people who blogged about music more generally as opposed to one or two celebrity musicians. ↩︎

-

A surprise to me was that the vast majority of people who blogged about Chinese music were men. The m:f ratio before I started deliberately recruiting women judges was 4:1. ↩︎